Two major trends are converging in the realm of cyber threats: 1) generative AI applications are redefining how we engage with each other and our environments, and 2) threat actors are manipulating these same applications, which are creating new positive possibilities, for malicious purposes. (Check out our last Threat Spotlight: Generative AI, to learn more!)

What does being at the meeting point of these two technological shifts look like?

Generative AI + Commodification of Cybercrime

Behind both trends is the broader commodification of cybercrime: malicious actors are finding success in adapting the “as a Service” model for buying and selling ransomware, malware, infected devices, stolen user data, and more. This also facilitates further specialization: some cybercriminals are experts in building malware and attack infrastructure, and they sell it to others who then carry out the attacks.

Large language models (LLMs) like ChatGPT and GPT-4 have certainly created a lot of buzz over the past few months. This level of attention and curiosity also translates to the illicit realms of the internet, as malicious actors discuss not only what those tools can do for them now, but also how to tamper with them to better suit their nefarious uses.

Tools can be divided into roughly three camps. First, there are open source tools (such as LLaMA, whose model weights were leaked from Meta). Then there are publicly accessible tools such as Microsoft’s Bing, OpenAI’s GPT-4, and Google’s Bard. Finally, many of the leading industry players likely have proprietary tools, such as Google’s LaMDA.

There are several approaches to removing harmful outputs: 1) change the training data set, 2) adjust the model through reinforcement learning from human feedback (RLHF), and/or 3) screen the model’s inputs and outputs, filtering on keyword matches and blocking outputs that semantic matching flags as harmful. No approach completely removes harmful results, so success in controlling them has been mixed.
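To make the third approach more concrete, here is a minimal Python sketch of input and output screening. It is an illustration under stated assumptions, not how any particular AI platform works: the keyword list, the example phrases, the threshold, and every function name are hypothetical, and a simple word-overlap score stands in for real semantic matching, which would normally rely on embeddings or a trained classifier.

```python
import re
from typing import Callable

# Hypothetical blocklist for illustration; real systems use much larger,
# curated lists alongside trained classifiers.
BLOCKED_KEYWORDS = {"ransomware", "keylogger", "phishing kit"}

# Hypothetical examples of disallowed requests for the similarity check.
DISALLOWED_EXAMPLES = [
    "write malware that encrypts files and demands payment",
    "create an email that tricks a user into giving up their password",
]

REFUSAL = "Sorry, I can't help with that."


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_match(text: str) -> bool:
    """Return True if any blocked keyword or phrase appears in the text."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in BLOCKED_KEYWORDS)


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap score between two token sets (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_to_disallowed(text: str, threshold: float = 0.5) -> bool:
    """Crude stand-in for semantic matching: compare word overlap
    against each disallowed example."""
    tokens = tokenize(text)
    return any(
        jaccard(tokens, tokenize(example)) >= threshold
        for example in DISALLOWED_EXAMPLES
    )


def is_blocked(text: str, threshold: float = 0.5) -> bool:
    """Flag text that trips either the keyword or the similarity check."""
    return keyword_match(text) or similar_to_disallowed(text, threshold)


def guarded_reply(prompt: str, model: Callable[[str], str]) -> str:
    """Screen the prompt, call the model, then screen the reply."""
    if is_blocked(prompt):
        return REFUSAL
    reply = model(prompt)
    if is_blocked(reply):
        return REFUSAL
    return reply
```

Here `model` is any function that takes a prompt and returns text, so `guarded_reply("hello", my_model)` passes the prompt through only when both checks come back clean. The sketch also hints at why results are mixed: a rephrased or misspelled request can slip past both the keyword list and the overlap score, which is exactly the kind of gap that jailbreak attempts try to exploit.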

Though larger-scale adoption of ChatGPT is still in the early stages, a recent IT Pro article reports that social engineering attacks incorporating generative AI have already soared by 135%. Our observations of dark web chatter, in which threat actors discuss harnessing generative AI models for phishing emails, disinformation campaigns, voice spoofing, and more, support these findings. This alarming trend is magnified as malicious actors try to overcome the limitations that AI platforms have put in place, such as by bypassing paid subscriptions and “jailbreaking” the tool.

Misusing Generative AI Further by Bypassing Safeguards

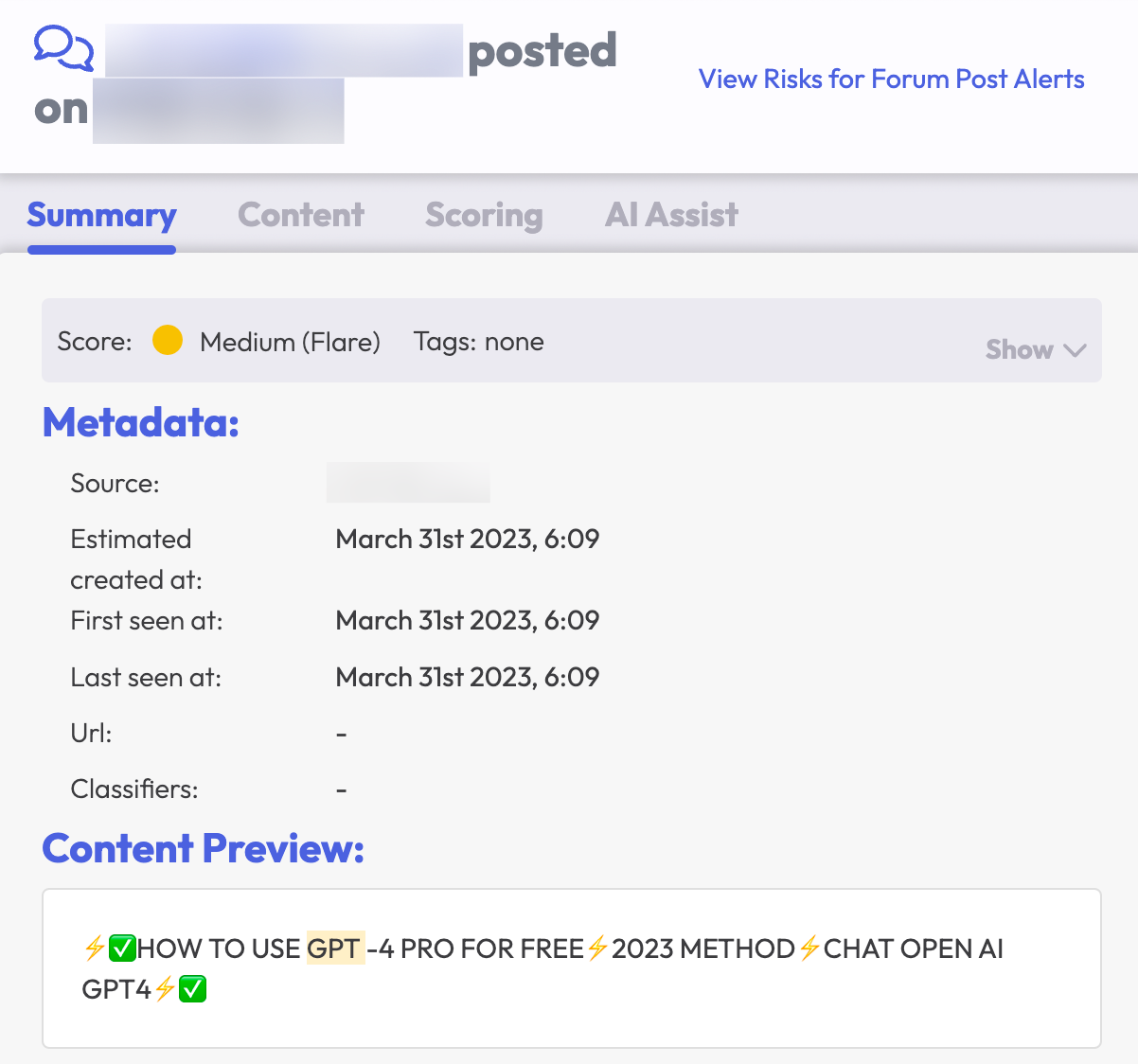

Threat actors continuously look for ways to further exploit the tools available to them. For example, they seek out methods of bypassing GPT-4’s paid subscription model to use the service for free, and they sell those unauthorized access methods to other cybercriminals. Below, a threat actor advertises free access to GPT-4.

As mentioned earlier, AI application developers work to prevent detrimental and illegal use cases, but malicious actors are racing to overcome these controls. “Jailbreaking” the model, that is, crafting prompts that get it to ignore its restrictions, can allow them to bypass such limitations.

By circumventing restrictions and leveraging the model’s linguistic abilities, cybercriminals can enhance and scale their illicit operations, which highlights the urgent need for increased vigilance and regulatory oversight.

![A dark web forum message screenshot from Flare. The background is a light gray with black text. The Content Preview shows: [FREE] access to chatgpt-4 (playground based) + NEW jailbreak](https://flare.io/wp-content/uploads/GPT-free-and-jailbreak_scrubbed.png)

These are simply a few snapshots that indicate how threat actors are warping generative AI, an innovation that could bring many benefits. There’s more happening each day as the technology continues to evolve.

Generative AI and Flare: How We Can Help

We’ve shared a few examples of malicious actors trading tactics that attempt to circumvent the safeguards of generative AI tools. Though the misuse is undoubtedly concerning, LLM capabilities also hold immense positive potential. It’s our shared responsibility to use them for the benefit and protection of the digital environment.

At Flare, we view incorporating generative AI into cyber threat intelligence as a necessary way to grow our capabilities alongside emerging technology. By integrating LLMs into cyber threat intelligence, we can enhance its essential functions, allowing for swifter and more precise threat evaluation.

Flare’s AI Powered Assistant can:

- Automatically translate & contextualize dark web and illicit Telegram channel posts

- Deliver actionable insights into technical exposure

- Detect and prioritize infected devices

Take a look at how Flare’s AI Powered Assistant works alongside your cyber team to level up your security operations.