This article was updated on November 14th, 2025.

ChatGPT and GPT-5 have been dazzling their users with their linguistic prowess for the past few years. But have you ever wondered if (and how) these large language models (LLMs) are also making a splash in the darker corners of the internet? It turns out that threat actors are indeed finding creative ways to exploit the technological marvels of generative AI, and have been for some time.

Early misuse of ChatGPT and similar large language models mostly involved users trying to coax AI into performing a single prohibited task, such as writing malicious code or explaining drug synthesis. (Pro tip: ChatGPT is not a trustworthy chemistry partner. Don’t take pharmaceutical advice from AI.)

However, in 2025 the threat landscape has evolved well beyond simple prompt manipulation.

Is Generative AI Good at Cybercrime? Not Always

Let’s start with the good news: Generative AI does not seem very well suited to cybercrime, at least not the technical side of things. At Flare Research, we’ve found it’s not great at creating malware, exploiting networks, or finding vulnerabilities.

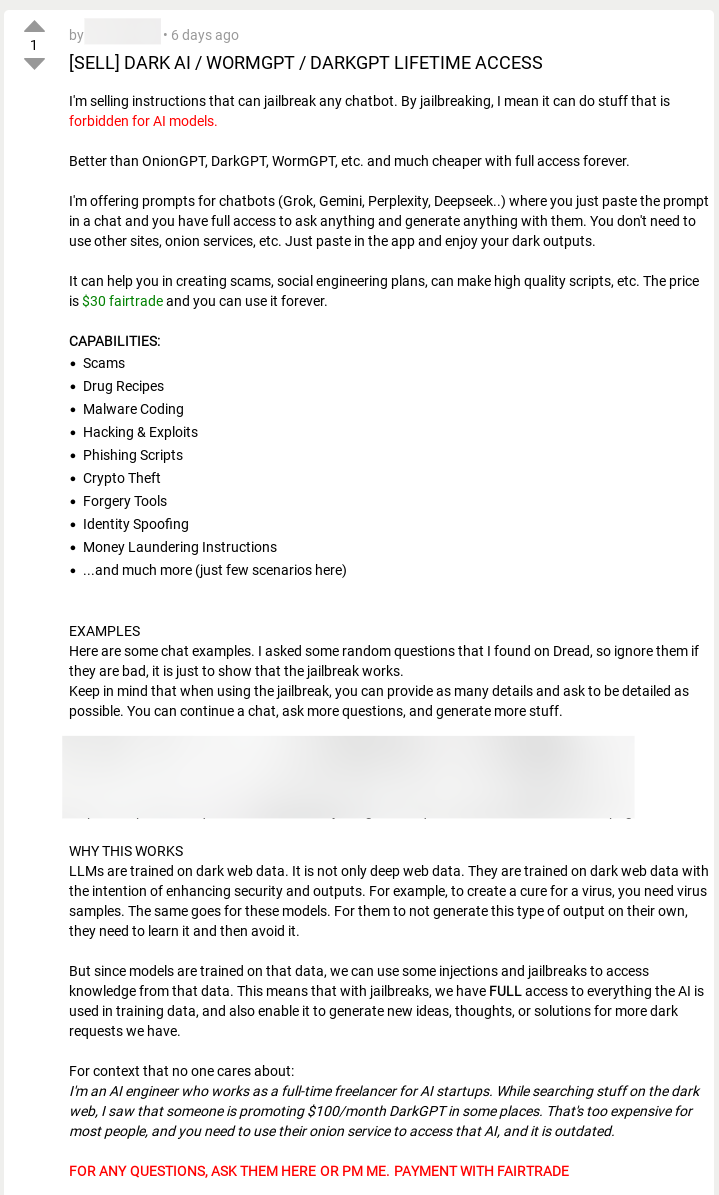

Legitimate LLMs also have safeguards built in to prevent threat actors from doing any of these things. As some ChatGPT users know, it can sometimes respond that it’s “only an AI language model trained by OpenAI” even for harmless requests. Bad actors have two choices here: they can either spend time engineering prompts to bypass filters, or they can build (or buy) a jailbreak that lets them use ChatGPT and newer large language models to write malware and perform other malicious tasks.

The Jailbreak-as-a-Service Market

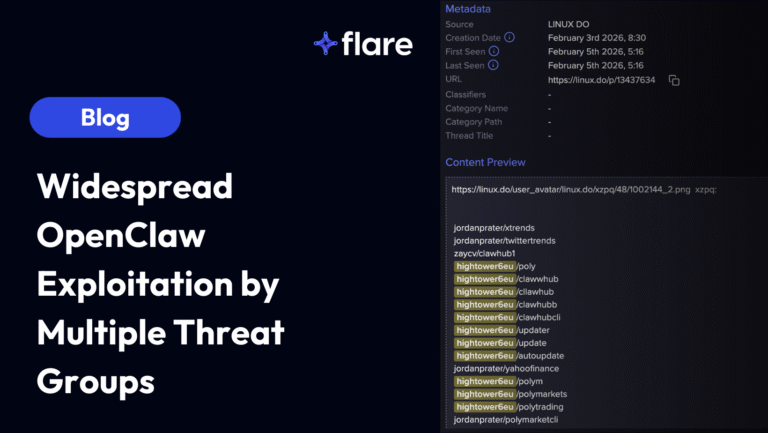

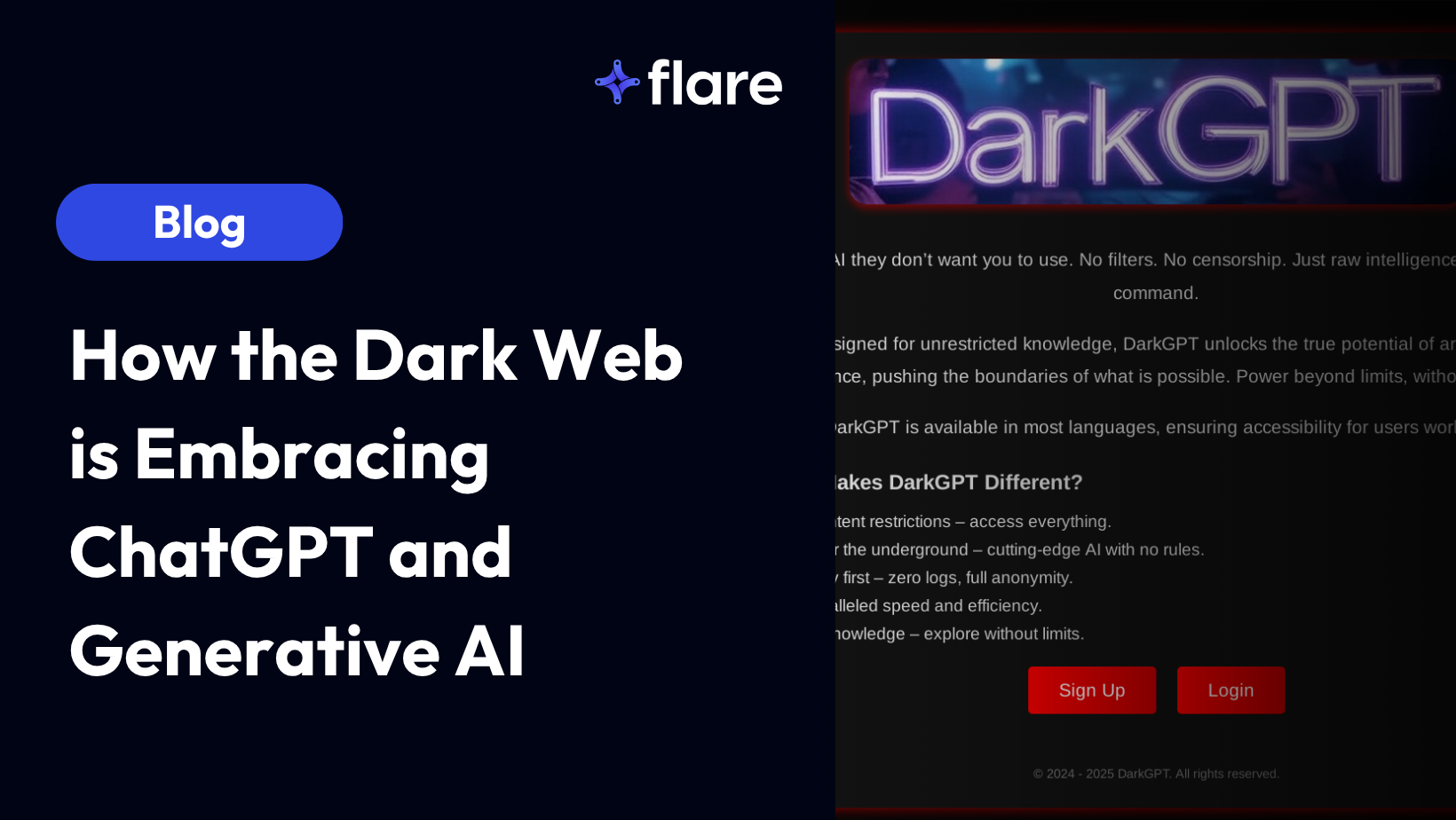

In the past few years, threat actors have been working to develop their own LLM versions: WormGPT, DarkGPT, and FraudGPT are all being marketed in the cybercrime economy as jailbroken GPTs (even if they’re not true GPTs) optimized for fraud and crime.

However, these LLMs (if they even are LLMs) aren’t very helpful. According to our research, user reviews are filled with complaints, and there doesn’t appear to be widespread adoption of these models.

How is GenAI Being Used Effectively for Cybercrime?

Now for the bad news. There are some areas where AI can be used effectively in the cybercrime market. For example, it’s particularly good at content creation, and that can be used for social engineering, among other things.

Phishing Campaigns

AI is likely used most for creating more believable phishing messages, especially by people who aren’t fluent in the target language. Many threat actors come from the Russian-speaking world, so AI puts well-written, tailored messages in natural language within reach of all criminals, regardless of their language skills.

AI is also very good at creating content with a sense of urgency. Writing a compelling call to action is a skill that has to be developed, and not all cybercriminals are naturally good at that. However, now AI can start drafting fake messages with content that makes unsuspecting victims click.

Spoofing via AI

Written messages aren’t safe from AI, and neither are voice calls.

Malicious actors appear to be devising strategies to exploit AI for voice spoofing scams. As we unfortunately anticipated, cybercriminals are harnessing text-to-speech AI algorithms to create convincing human-like voices, sometimes even imitating specific individuals. These realistic voices are then used to extract sensitive information, such as 2FA codes, from unsuspecting victims. As AI algorithms become more accessible and widespread, we can expect this trend to persist and escalate. This highlights the importance of remaining vigilant and raising awareness about the potential misuse of AI technology by threat actors of varying sophistication.

Agentic AI: The Next Phase of Cybercrime

Researchers and cybersecurity analysts are now observing the rise of agentic AI, large language models that act as autonomous agents, capable of planning, orchestrating, and executing multi-step operations with minimal human oversight. Instead of relying on human operators to guide every action, these systems can chain tasks together: identifying vulnerabilities, gathering intelligence, writing code, deploying payloads, and even covering their digital tracks.

A study from Carnegie Mellon University demonstrated that modified LLMs could autonomously plan and carry out simulated cyberattacks in realistic network environments, adapting their tactics as defenses changed. Cybercriminals on dark web forums are beginning to experiment with “autonomous red team” tools: frameworks that let users configure AI agents to perform reconnaissance, exploit automation, and phishing at scale.

This shift from prompt-based misuse to agent-based orchestration marks a turning point. Once an attacker defines a goal, an autonomous AI can handle much of the operational workflow, accelerating the speed and sophistication of attacks. As these technologies become more accessible, defenders will need to anticipate a new class of threats, not just from malicious prompts, but from self-directed AI systems capable of continuous, adaptive action.

Cybercrime and GenAI: What Does This Mean?

As AI algorithms continue to evolve and gain prominence, it is crucial for both organizations and individuals to recognize the potential dangers they pose. Cybersecurity professionals must stay vigilant and constantly adapt their strategies to stay ahead of the ever-changing threat landscape.

Meanwhile, individuals should exercise caution when encountering suspicious communications, even if they sound authentic. Double-checking the source and avoiding sharing sensitive information can create obstacles for a threat actor’s cybercrime attempts.
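
As a rough illustration of what double-checking the source can look like in practice, here is a minimal Python sketch that flags links in a message whose domain does not match the sender's domain. It is not a Flare tool or a complete phishing detector. The function names (`domain_of`, `mismatched_links`) are hypothetical, and the "last two labels" domain comparison is a simplifying assumption that breaks on suffixes such as `.co.uk`.

```python
import re
from email.utils import parseaddr
from urllib.parse import urlparse

# Simple pattern for http(s) URLs in plain text (an assumption, not a full URL grammar).
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def domain_of(host: str) -> str:
    """Return the last two labels of a hostname (a naive registrable domain)."""
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def mismatched_links(from_header: str, body: str) -> list[str]:
    """List URLs in the body whose domain differs from the sender's domain."""
    _, address = parseaddr(from_header)
    sender_domain = domain_of(address.rpartition("@")[2])
    flagged = []
    for url in URL_PATTERN.findall(body):
        host = urlparse(url).hostname or ""
        if domain_of(host) != sender_domain:
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    message = (
        "Verify now: https://example.com.account-check.net/login "
        "or visit https://www.example.com/help"
    )
    for link in mismatched_links("Example Bank <alerts@example.com>", message):
        print("Link does not match sender domain:", link)
```

A mismatch is not proof of fraud, since legitimate senders often link to third-party services, and a matching domain is not proof of safety. The point is only that a well-written message deserves the same scrutiny of its origin as a clumsy one.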

Generative AI and Flare

The Flare Threat Exposure Management solution empowers organizations to proactively detect, prioritize, and mitigate the types of exposures commonly exploited by threat actors. Our platform automatically scans the clear & dark web and prominent threat actor communities 24/7 to discover unknown events, prioritize risks, and deliver actionable intelligence you can use instantly to improve security.

Your security team can take advantage of AI to get ahead of threat actors with Threat Flow.

Flare integrates into your security program in 30 minutes and often replaces several SaaS and open source tools. See what external threats are exposed for your organization by signing up for our free trial.