In today’s expanded attack surface, new technologies create new opportunities for businesses and malicious actors. Attackers can use the same artificial intelligence (AI) and large language models (LLMs) that companies use, often in the same way. In both cases, these technologies reduce the time spent on repetitive, manual tasks. For example, organizations may use LLMs, like ChatGPT, to write documentation faster. Similarly, malicious actors use these technologies to write more realistic and compelling phishing emails.

Where malicious actors historically needed sophisticated technical skills, new technologies narrow the criminal skills gap, enabling less technically experienced cybercriminals to deploy attacks. To navigate the modern cyber threat landscape, offensive and defensive security practitioners should understand how today’s malicious actors use new underground cybercriminal business models to deploy attacks more efficiently.

To watch the full webinar, check out Navigating the Cyber Threat Landscape.

The Reality of Modern Credential Operations

The cybersecurity industry often focuses on sophisticated attacks, leading people to assume that most breaches arise from nation-state actors, zero-day vulnerabilities, reverse engineering, or other paths that require advanced skills. However, modern red teaming means looking at modern credential operations. According to publicly available research, attacks that led to a breach often follow an order of operations that begins with methods requiring little technical skill.

As modern cybercrime increasingly becomes a business, attackers become more pragmatic, looking for an easy way to compromise corporate systems and networks. Adversaries are shifting their attacks left, frontloading campaigns with more reconnaissance than before. They need an incredibly small amount of information to compromise an organization’s critical assets, as long as it is the right information to get in and out quickly and easily. The proliferation of cloud-based infrastructure changes the logging landscape, especially as organizations connect more applications to the public internet, which increases the number of attack paths. Meanwhile, as organizations add more domains and subdomains, they often lose track of resources and of which ones have been security reviewed and which have not.

The Different Levels of Attack Methods

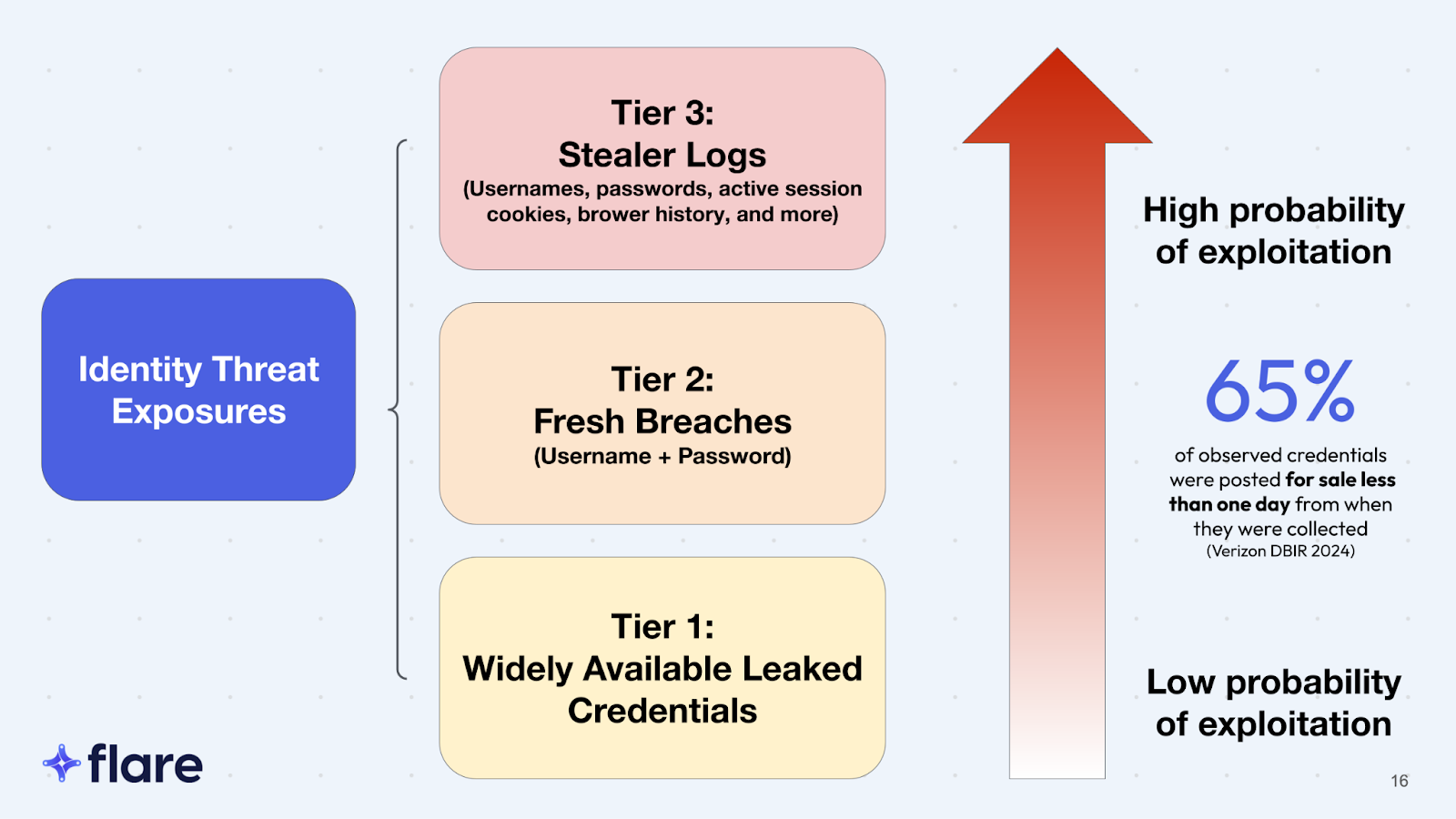

A good way to think about these different operations is as leveling up in a video game.

In a video game, players start with the easiest skills to master, then move through the game, “leveling up” as they add new capabilities. Attackers work similarly because the easiest activities to complete give them a higher return on investment. When attackers think like a business, spending fewer hours on an attack means making more money per hour from the illicit activity.

Looking at the levels, this financial “return on investment” mentality makes more sense.

- At Level 1, cybercriminals use leaked credentials available on the public web, from sources like Pastebin, torrents, DeHashed, or GitHub. Although these may not be the freshest credentials, gathering them costs minimal time and money.

- At Level 2, the attackers start using dark web forums and channels.

- Level 3 brings them to the Ransomware-as-a-Service (RaaS) ecosystem while

These low-barrier-to-entry attack methods remain the norm until Levels 7 to 9. At these higher levels, nation states and sophisticated attackers start spending the time and financial resources to identify new zero-day attacks or engage in corporate espionage.

Identifying Trends

Additionally, many advanced persistent threats (APTs), like sophisticated ransomware groups, start with these low-resource strategies for two main reasons.

First, logs often fail to capture these early attack methods. An organization can be logging from every on-premises and cloud source, but malicious actors can easily evade detection when they download stealer logs from Telegram and use an employee’s single sign-on (SSO) credentials. Event and system logs often fail to record Level 1 through Level 4 attack methods, meaning that security information and event management (SIEM) tools fail to detect them.

Second, these attack methods are extremely simple. A teenager with access to a YouTube tutorial can easily pull a pair of credentials off Telegram, meaning that the barrier to entry for cybercrime has lowered radically.

Breaking through the Noise

Organizations rely on the logs that their IT stack generates. However, cloud technologies, like cloud-hosted portals, produce high volumes of logs, even for a standard, mid-sized organization. This noise increases the number of false alerts, which worsens security analysts’ alert fatigue.

How Attackers Can Hide

If attackers purchase a web token or cookies from a Telegram group, detection most likely happens at the system or browser level, where someone has configured a correlation rule to detect a change in location during the same session. To detect these attacks, organizations need to create specific rules for each use case, which can become time-consuming and cost-ineffective.
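As a rough illustration of the kind of correlation rule described above, the following Python sketch flags sessions that appear from more than one country. The `SessionEvent` fields and the idea that each log record already carries a geolocated country are assumptions made for this example, not the format of any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionEvent:
    # Hypothetical log record; real field names depend on the log source.
    session_id: str
    user: str
    country: str      # assumed to be geolocated from the source IP upstream
    timestamp: float  # seconds since the epoch


def find_location_changes(events):
    """Return {session_id: [countries in order first seen]} for sessions seen in 2+ countries."""
    countries_by_session = defaultdict(list)
    for event in sorted(events, key=lambda e: e.timestamp):
        seen = countries_by_session[event.session_id]
        if event.country not in seen:
            seen.append(event.country)
    return {sid: seen for sid, seen in countries_by_session.items() if len(seen) > 1}


events = [
    SessionEvent("s1", "alice", "CA", 1000.0),
    SessionEvent("s1", "alice", "CA", 1600.0),
    SessionEvent("s2", "bob", "US", 1200.0),
    SessionEvent("s2", "bob", "RO", 1500.0),  # same session, new country
]
print(find_location_changes(events))  # {'s2': ['US', 'RO']}
```

A rule like this is only as good as its geolocation data, and legitimate VPN use or travel would need allowances, which is part of why such rules take time to tune.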

While these detections might capture a high-volume, credential-based attack, malicious actors can easily blend a small credential stuffing or password spray attack into typical internet traffic. Some large organizations may not alert on this level of sophistication, while some smaller teams may have these capabilities.
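One way to look for a small password spray is to count how many distinct accounts each source has failed to log in to over a long window, since a spray tries a few passwords against many accounts and may never trip a per-account lockout. The sketch below assumes failed logins are already available as `(source_ip, username)` pairs. The function name and the threshold are hypothetical and would need tuning per environment.

```python
from collections import defaultdict


def spray_candidates(failed_logins, min_distinct_users=5):
    """Return {source_ip: distinct_user_count} for sources at or above the threshold.

    failed_logins: iterable of (source_ip, username) pairs taken from failed
    authentication events over a long window (an assumed input format).
    """
    users_by_source = defaultdict(set)
    for source_ip, username in failed_logins:
        users_by_source[source_ip].add(username.lower())
    return {
        ip: len(users)
        for ip, users in users_by_source.items()
        if len(users) >= min_distinct_users
    }


# Illustrative data using documentation-reserved IP ranges.
failed = [
    ("203.0.113.7", "alice"),
    ("203.0.113.7", "bob"),
    ("203.0.113.7", "carol"),
    ("203.0.113.7", "Alice"),   # same account, different casing
    ("198.51.100.4", "dave"),
    ("198.51.100.4", "dave"),   # one user mistyping a password
]
print(spray_candidates(failed, min_distinct_users=3))  # {'203.0.113.7': 3}
```

An attacker who rotates source addresses would slip past this grouping, so a fuller rule would likely group on other attributes as well.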

Reducing the Power of the Cookie

While an organization might be able to detect malicious actors using stolen credentials, it is less likely to identify cybercriminals injecting cookies into a browser. Recognizing the ability to evade detection, cybercriminals increasingly purchase these stealer logs from the dark web or illicit Telegram channels.

Problematically for attackers, collecting these stealer logs and placing them on the cybercriminal market takes at least 24 hours. To mitigate risk, organizations can implement a complete cookie refresh every 24 hours. However, this protection increases end-user frustration by requiring users to go through the login and multi-factor authentication processes every day.
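The 24-hour refresh amounts to an absolute session lifetime check. The sketch below is a minimal illustration, assuming the application stores a timezone-aware issue time for each session. `MAX_SESSION_AGE` and `session_expired` are hypothetical names, not part of any framework.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the limit mirrors the 24-hour window described in the text.
MAX_SESSION_AGE = timedelta(hours=24)


def session_expired(issued_at, now=None, max_age=MAX_SESSION_AGE):
    """Return True when the session is at least max_age old and must be re-authenticated."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - issued_at >= max_age


issued = datetime(2024, 5, 1, 9, 0, tzinfo=timezone.utc)
print(session_expired(issued, now=datetime(2024, 5, 1, 17, 0, tzinfo=timezone.utc)))  # False
print(session_expired(issued, now=datetime(2024, 5, 2, 9, 0, tzinfo=timezone.utc)))   # True
```

The age is measured from when the session was issued rather than from the last activity, because an idle timeout alone would never expire a stolen cookie that an attacker keeps active.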

Security practitioners are likely to see new solutions that respond to users’ need for session continuation while mitigating these risks. For example, Google recently announced a new feature that seeks to bind authentication sessions to a user’s device, hoping to disrupt the cookie theft malware industry.

Automating Credential Operations

The 24-hour cookie theft attack lifecycle typically includes the following processes:

- Distributing malware

- Installing infostealer malware on an endpoint

- Extracting data

- Sending the stolen credentials to a command and control (C2) server

- Populating a back-end with the data, like session cookies or credentials stored in the browser

- Uploading and selling the data on the dark web or an illicit Telegram channel

However, increasingly, threat actors automate some of these tasks, like using the Telegram API to populate their channels.

Additionally, this automation means that cybercriminal operations often collect, sell, and repackage credentials. Malicious actors might pay for early access to the credentials, but as the data becomes public, it often reaches a broader audience. With greater access to these credentials, commodity adversaries use scripts to pull the credentials and automate the attacks.

Initial Access Brokers in the Cyber Threat Supply Chain

Initial access brokers (IABs) are threat actors who sell corporate access, like access to corporate networks, to ransomware affiliates and other groups who may want it. They establish initial access, then sell it in an auction-style format on three main forums:

- Exploit

- XSS

- RAMP

This subgroup of cybercriminals will often buy bulk stealer logs so that they can identify the ones with corporate access. Essentially, IABs engage in due diligence for other cybercriminals, vetting the data from the stealer logs and selling the instructions for how to deploy the attack.
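Defenders can run the same triage against their own domains. The sketch below filters parsed stealer-log entries down to those that point at a corporate host. Stealer log formats vary widely, so the `(url, username)` tuple format and the function name are assumptions made for illustration.

```python
from urllib.parse import urlsplit


def corporate_entries(entries, corporate_domains):
    """Return (host, username) pairs whose URL host is a corporate domain or one of its subdomains.

    entries: iterable of (url, username) tuples (an assumed, already-parsed format).
    """
    matches = []
    for url, username in entries:
        host = (urlsplit(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in corporate_domains):
            matches.append((host, username))
    return matches


entries = [
    ("https://sso.example.com/login", "alice"),
    ("https://shop.test/cart", "alice"),
    ("https://notexample.com/", "bob"),  # look-alike host, not a subdomain
]
print(corporate_entries(entries, ["example.com"]))  # [('sso.example.com', 'alice')]
```

Matching on the exact domain or a dot-prefixed suffix keeps look-alike hosts from being counted as corporate assets.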

In a modern threat landscape, credential operations and credential sales become one and the same. These attacks become less sophisticated, often lacking a structured C2. As offensive security teams emulate adversaries, they need to consider this new lack of sophistication so that the defenders can implement meaningful detections.

A Browser and A Dream

Software-as-a-Service (SaaS) applications modernize the attack surface, changing how cybercriminals attack organizations. Since logs often fail to record these attack methods, security teams need to update their monitoring and detection strategies.

For example, traditional penetration tests focused on getting domain administrator access. However, this approach likely triggers a detection. Modern attackers will break through an organization’s hard armor, gaining initial access to the VPN and the internal network so that they remain undetected.

As security teams work to proactively mitigate risk, they should consider the various ways that cybercriminals can exploit new technologies.

SaaS Applications

In 2023, Flare research found that at least 1.91% of stealer logs contained leaked corporate credentials for commonly used business applications, like Salesforce, HubSpot, AWS, Google Cloud Platform, Okta, and DocuSign. Additionally, more than 200,000 stealer logs contained OpenAI credentials, representing 1% of all analyzed data.

For offensive security teams, infrastructure mind-mapping tools, like Miro, can lead to data leaks when users add credentials to graphics with network diagrams. These SaaS platforms provide useful tools, but they often come with additional security risks like:

- Lack of robust logging

- Additional costs for enabling two-factor authentication or SSO

AI Models

Many companies train their models internally, typically using open-source software. The web applications used for this work connect to the internal LAN, but they often lack authentication, placing the internal data used to train the AI at risk.

AI Applications

As organizations implement AI applications, they may not configure them appropriately. For example, some use blob storage or buckets to manage the log history of people’s prompts and response data, and search engines can index that storage if it is publicly exposed. These logs can expose credentials, similar to regular web application data leaks. Similarly, developers may paste secrets into code assist applications, exposing things like API tokens or credentials.

Data Exposure Beyond Credentials

As companies adopt cloud-delivered services, they need to consider additional data leakages that can impact their overarching security.

The Collaboration Tool Problem

With more organizations using cloud-native collaboration tools, corporate resource data leaks become increasingly important to cybersecurity. For example, internal users often share information using misconfigured file shares on web resources, like SharePoint directories or Google Drives. Once the user shares the file with “anyone with the link,” Google and other search engines can index it.

Malicious actors can do a search for:

- Domain: companyname.com

- File type: PDF, spreadsheet, XLSX

If the search engine has indexed the domain, then it can return documents that should otherwise be kept private. Collaboration suites like Google Docs and SharePoint use the company’s Infrastructure-as-a-Service (IaaS) provider to host content, but they remain risky because users control the sharing settings, leading to accidental data leaks.
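Security teams can run the same search against their own domains as a self-audit. The sketch below only builds query strings using the `site:` and `filetype:` search operators. It does not call any search API, and the function name and the list of file types are illustrative assumptions.

```python
def build_audit_queries(domain, file_types):
    """Build one search query string per file type, restricted to the given domain."""
    return [f"site:{domain} filetype:{file_type}" for file_type in file_types]


queries = build_audit_queries("companyname.com", ["pdf", "xlsx"])
for query in queries:
    print(query)
# site:companyname.com filetype:pdf
# site:companyname.com filetype:xlsx
```

Pasting these strings into a search engine shows what an outsider would see, which helps prioritize which shares to lock down first.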

To mitigate risks, organizations should disable public link sharing, requiring users to share with a specific identity to maintain the file’s security and data privacy.

The Open-Source Repository Problem

Increasingly, malicious actors have focused on publicly available code libraries and repositories. As companies adopt infrastructure-as-code (IaC), data leaks expand beyond the organization’s typical security monitoring.

For example, attackers can scan these open-source libraries to identify data leaks like:

- Hard-coded secrets: API keys or passwords included in source code

- Proprietary code: custom code accidentally uploaded to the Docker marketplace

- Files: documents or spreadsheets exposed in a Jenkins server
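Teams can scan their own code for the first item in this list before attackers do. The following sketch checks text line by line against a few regular expressions. The patterns are deliberately simple assumptions for illustration, will produce both false positives and misses, and are not a substitute for a dedicated secret scanner.

```python
import re

# Illustrative patterns only; real scanners use far larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_assignment": re.compile(
        r"(?i)(?:api[_-]?key|password|secret|token)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text):
    """Return a list of (line_number, pattern_name) for every line that matches a pattern."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings


# Placeholder values, not real credentials.
sample = "\n".join([
    'region = "us-east-1"',
    'aws_key = "AKIAIOSFODNN7EXAMPLE"',
    'db_password = "correct-horse-battery"',
])
print(scan_text(sample))  # [(2, 'aws_access_key_id'), (3, 'hardcoded_assignment')]
```

Running a check like this in a pre-commit hook or CI job catches secrets before they reach a public repository, which is cheaper than rotating them afterward.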

The Future of AI

As AI continues to mature, security practitioners will need to understand the use cases that make sense and the risks that the technology creates.

Breaking Bad

Offensive security practitioners have already been hard at work trying to break the current consumer models, and succeeding. Additionally, while few news stories exist, attackers do appear to be using AI effectively. Some examples include:

- Using voice cloning software to perpetrate fraud

- Selling models with full-blown, ChatGPT-style chatbot interfaces

- Parsing infostealer data faster to apply it more effectively to campaigns

- Writing more realistic, convincing phishing emails

Bringing AI to the Table

For every criminal AI use case, a defender opportunity exists. AI can supercharge various areas of an organization’s security program by:

- Providing example code and explaining a vulnerability

- Creating scanners that identify issues

- Generating documentation and development guides

About Flare

The Flare Threat Exposure Management (TEM) solution empowers organizations to proactively detect, prioritize, and mitigate the types of exposures commonly exploited by threat actors. Our platform automatically scans the clear & dark web and illicit Telegram channels 24/7 to discover unknown events, prioritize risks, and deliver actionable intelligence you can use instantly to improve security.

Flare integrates into your security program in 30 minutes and often replaces several SaaS and open-source tools.

Want to learn more about monitoring for relevant threats with Flare? Check out our free trial.