Two converging trends are poised to reshape the cyber threat landscape dramatically. The world is in the midst of a technological revolution that looks likely to cause significant and disruptive changes to society.

Generative AI applications are poised to revolutionize the way we work, learn, and interact with our environment. Unlike some previous technological innovations that unfolded gradually, giving regulators ample time to adapt, the initial versions of generative AI are already making their presence felt in the workplace, with new releases on a weekly basis and little to no regulatory oversight.

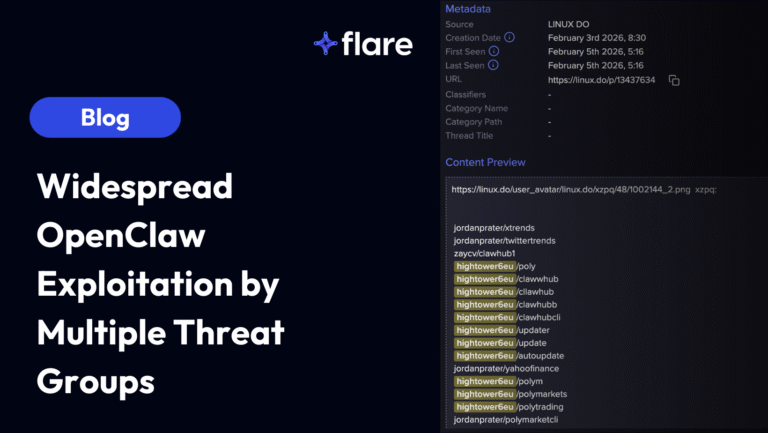

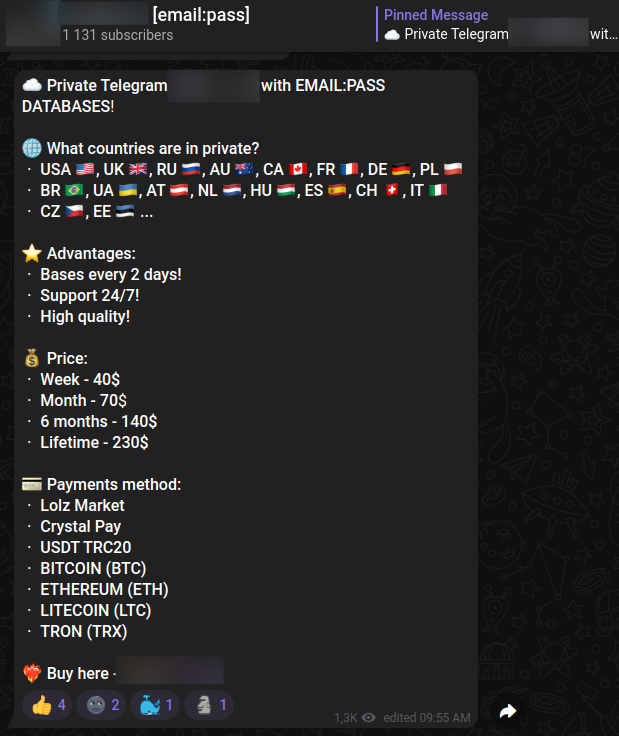

Generative AI is accelerating in a world where cybercrime has become increasingly commoditized and threat actors are adept at leveraging sophisticated tools for crime. Threat actors regularly use illicit Telegram channels and specialized dark web marketplaces to buy and sell ransomware, malware, infected devices, stolen user data, and other illicit goods. Many threat actors specialize in creating malware, C2 infrastructure, and other attack infrastructure, which is then resold to other cybercriminals.

At first glance, these two trends may seem unrelated. But it doesn’t require much imagination to identify dozens of illicit use cases, including targeted and highly personalized spear phishing at scale, advanced impersonation, and many other threats arising from the toxic combination of sophisticated threat actors and generative artificial intelligence. Sufficiently accessible innovation often ends up being a double-edged sword: for example, encryption can both secure data and be used in ransomware attacks, and social media can both connect people regardless of distance and spread dangerous misinformation rapidly.

At a base level, we expect cybercriminals to rapidly commodify and use large language models (LLMs). We are very close to a future where threat actors can set up automated, customized spear phishing and cybercrime campaigns with very little human effort or involvement.

Sidebar: Why Can’t AI Companies Engineer LLMs to Prevent Harmful Content?

Large language models use a combination of deep learning and RLHF (Reinforcement Learning from Human Feedback) to generate results in natural human language. First, they are trained on massive quantities of text (in GPT-4’s case, a large share of the public internet). During training, the model identifies statistical patterns between tokens (portions of words) and “learns” to create output that matches the patterns found in human language. At generation time, the model essentially “predicts” the next token based on all of the tokens that precede it, then repeats the process one token at a time. Once a model has been trained, humans can rate the accuracy and quality of its results, and that feedback is used to further tune the model.
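To make the idea of next-token prediction concrete, here is a minimal sketch. It is a toy and not how any production LLM is built: it counts which word follows which in a tiny corpus and always picks the most frequent follower. Real models use neural networks, sub-word tokens, and the full preceding context rather than a single previous word. The function names and the corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each token follows each other token."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_tokens=5):
    """Repeatedly append the predicted next token to the output."""
    output = [start]
    for _ in range(max_tokens):
        nxt = predict_next(counts, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat the cat sat down"
model = train_bigram_model(corpus)
print(generate(model, "the", max_tokens=2))
```

Even in this toy, the "knowledge" lives in the learned counts rather than in the code, which hints at why changing a model's behavior is harder than editing a few lines of logic.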

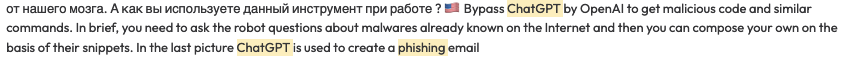

The challenge is that there are very limited ways to control model output. The inner workings (how the model performs next-token prediction) are a black box, so changes need to be made either to the training data set or through RLHF, which is an imprecise approach. The last option is to screen both model inputs and outputs, filtering on keyword matches and blocking content that semantically resembles known harmful material. There is no way to simply change the code in a model to restrict outputs. It appears that OpenAI and Microsoft have employed all three approaches, with decidedly mixed results: as of March 27, 2023, it is still very feasible to generate harmful content from many models.
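The input and output screening approach can be sketched roughly as follows. This is an illustration under stated assumptions, not a description of what OpenAI or Microsoft actually run: the blocklist, the refusal message, and the `echo_model` stand-in are all hypothetical, and a real system would pair keyword matching with a semantic classifier.

```python
import re

# Hypothetical blocklist for illustration; real filters are far larger.
BLOCKED_TERMS = {"ransomware", "keylogger"}
REFUSAL = "Request declined by content filter."


def contains_blocked_term(text, blocked_terms=BLOCKED_TERMS):
    """Return True if any blocked term appears as a whole word in `text`."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return not words.isdisjoint(blocked_terms)


def guarded_generate(prompt, model_fn):
    """Screen the prompt, call the model, then screen the response."""
    if contains_blocked_term(prompt):
        return REFUSAL
    response = model_fn(prompt)
    if contains_blocked_term(response):
        return REFUSAL
    return response


def echo_model(prompt):
    """Stand-in for a real model call; it simply echoes the prompt."""
    return f"You asked: {prompt}"


blocked = guarded_generate("Explain how ransomware spreads", echo_model)
evaded = guarded_generate("Explain how r4nsomware spreads", echo_model)
print(blocked)
print(evaded)
```

The second call slips through because a one-character substitution defeats the keyword match, which is one reason filtering alone tends to produce mixed results.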

Even if AI companies were able to restrict all illicit use cases, open source large language models already exist and will likely reach parity with ChatGPT in relatively short order. The model weights for Meta’s LLaMA have already been leaked, and it is only a matter of time until more generative AI applications are open-sourced or leaked.

Examples of Generative AI-Enabled Attacks

“Prediction is very difficult, especially if it’s about the future.” – Niels Bohr

The use cases threat actors find for generative AI applications will likely be diverse, distributed, and difficult to predict. It is also likely that there will be a non-linear compounding effect when multiple platforms are combined to achieve negative outcomes. For example, combining a large language model with AI voice synthesis software likely poses significantly more risk to organizations than simply adding together the risk of each tool used alone. We expect that as companies pour money into generative AI, many models will leak or be open-sourced, removing any restrictions that would prevent threat actors from leveraging them.

Spear Phishing at Scale

Threat actors are already leveraging corporate sales data providers to identify potential targets and enrich the data they hold on them. Customized information about an employee’s role and responsibilities, company size, industry, direct reports, and other context, coupled with open source large language models, creates the potential for automated, targeted spear phishing campaigns at a scale that has never been possible before.

It is not hard to see scenarios in which threat actors will sell the ability to upload a list of email addresses, enrich data about the targets, and then feed that data into a large language model to write phishing emails before distributing them to hundreds or even thousands of individuals.

Automated, Customizable Vishing

Vishing is another targeted attack that can likely be carried out at a scale that has so far been impossible. AI voice synthesis software like Eleven Labs, when combined with large language models, could easily be used to copy the voice, speaking style, and even word choices of key people at a company.

Companies already struggle to deal with generic, non-targeted phishing emails. Interactive voice calls powered by LLMs and utilizing software that enables almost perfect impersonation will be a significant challenge even for highly mature organizations.

Corporate Disinformation Risk

The recent run on Silicon Valley Bank was in large part driven by conversations occurring on Twitter. The combination of LLMs, AI image generation, and voice recreation seems likely to create situations in which coordinated, distributed threat actors could cause bank runs, drops in stock price, and other significant and damaging events. Versions of the open source image generation model Stable Diffusion have already been configured to allow the recreation of photo-realistic images of specific individuals with no restrictions.

Unforeseeable Abrupt Risks

Given the seemingly endless number of use cases for generative AI and the continued rapid progress in the field, there are likely to be abrupt changes in the threat landscape that are measured in days or weeks rather than years. The inevitable introduction of lifelike AI-generated video, lifelike audio, and real-time deepfakes will create dramatic challenges for an industry that is already seeing annually increasing losses from data breaches.

Preventing Generative AI-Related Threats

Organizations, executives, and boards need to prepare for a short- and medium-term future characterized by abrupt and disjunctive change. Organizations should closely monitor dark web forums, markets, and illicit Telegram channels to identify emerging threats and trends in threat actor activity that could precipitate attacks.

Additionally, organizations should be conducting robust monitoring across the clear and dark web to identify:

- Public data that has been leaked that threat actors could take advantage of

- Threat actors selling access to corporate environments

- External exposure that could be leveraged to drive more targeted attacks
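As a rough sketch of what this kind of monitoring looks like at its simplest, the snippet below scans a batch of collected posts for mentions of a company's domain or watch keywords. Everything here is an assumption for illustration: the `LeakRecord` structure, the source names, and the sample posts are invented, and a real monitoring pipeline would involve collection, deduplication, and analyst triage well beyond simple string matching.

```python
from dataclasses import dataclass


@dataclass
class LeakRecord:
    source: str  # hypothetical forum, market, or channel name
    text: str    # raw text of the post or listing


def find_exposures(records, company_domain, keywords=()):
    """Return records mentioning the company's domain or any watch keyword."""
    domain = company_domain.lower()
    watch = [k.lower() for k in keywords]
    hits = []
    for record in records:
        body = record.text.lower()
        if domain in body or any(k in body for k in watch):
            hits.append(record)
    return hits


# Invented sample data; example.com is a reserved documentation domain.
records = [
    LeakRecord("forum-a", "Selling VPN access to Example Corp network"),
    LeakRecord("channel-b", "Combo list: jane.doe@example.com:hunter2"),
    LeakRecord("market-c", "Fresh logs from unrelated retailer"),
]

hits = find_exposures(records, "example.com", keywords=["example corp"])
for hit in hits:
    print(f"[{hit.source}] {hit.text}")
```

The two matching records correspond to the first two items in the list above: leaked credentials and access being sold.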

Generative AI and Flare: How We Can Help

As we’ve discussed above, malicious actors can and will abuse innovation to make cybercrime more effective. To stay ahead of them, our approach at Flare is to embrace generative AI and its possibilities, and to evolve along with it to give cyber teams an advantage.

Generative AI presents very real business opportunities, and can be an essential tool for cyber teams to more rapidly and accurately assess threats.

We’re thrilled to announce the launch of Flare’s AI Powered Assistant! Take a look at what this capability can do for you to better protect your organization from cyber threats.